Lunar-G2R: Geometry-to-Reflectance Learning for High-Fidelity Lunar BRDF Estimation 🌘

Clémentine Grethen¹, Nicolas Menga², Roland Brochard², Simone Gasparini¹, Géraldine Morin¹, Jérémy Lebreton², Manuel Sanchez-Gestido³

¹IRIT, University of Toulouse, France · ²Airbus Defence & Space, France · ³ESA ESTEC, Noordwijk, The Netherlands

Abstract

High-fidelity rendering of lunar surfaces is essential for simulation, perception, and vision-based navigation, yet current pipelines rely on simplified or spatially uniform reflectance models. We introduce Lunar-G2R, a neural framework that estimates spatially varying BRDF parameters directly from lunar digital elevation models (DEMs). The predicted per-pixel reflectance maps enable physically based renderings that match real orbital observations more closely than renderings based on classical analytical models such as Hapke. Lunar-G2R is trained on real lunar imagery through a differentiable rendering formulation, allowing reflectance to be learned from geometry alone.

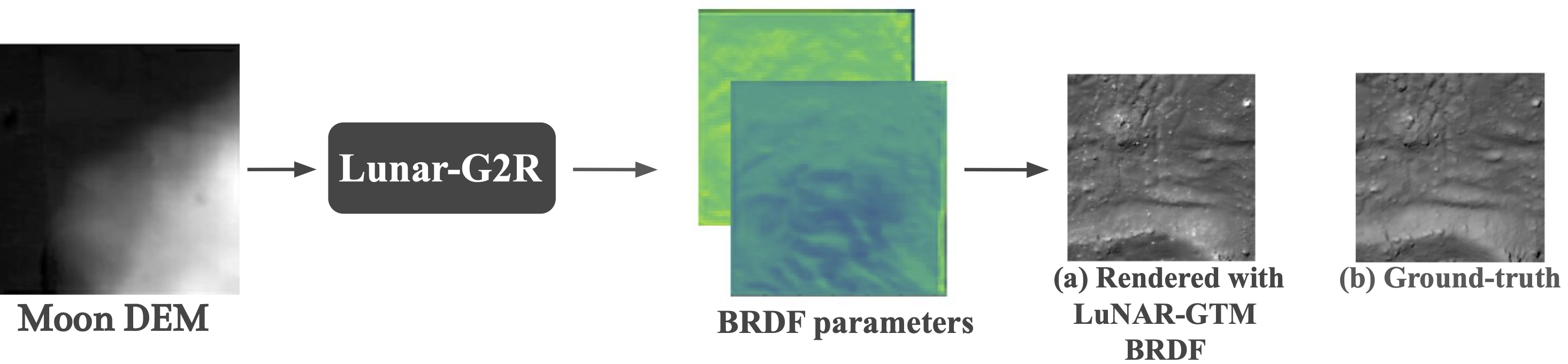

Lunar-G2R Inference Overview

Lunar-G2R takes a lunar digital elevation model as input and predicts dense, spatially varying BRDF (SVBRDF) parameter maps. Each DEM pixel is assigned its own local reflectance model, capturing fine-scale appearance variations that spatially uniform photometric models cannot represent. These SVBRDF maps can then be injected into a physically based renderer to synthesize realistic lunar images under arbitrary illumination and viewing conditions.
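To make the idea of shading each DEM pixel with its own reflectance concrete, here is a minimal sketch. It is not the Lunar-G2R renderer: the function names `dem_normals` and `render_svbrdf` are hypothetical, a single per-pixel albedo with Lambertian shading stands in for the predicted BRDF parameter maps, and cast shadows and the viewing direction are ignored.

```python
import numpy as np

def dem_normals(dem, spacing):
    """Unit surface normals of a height grid (rows = y, columns = x)."""
    dz_dy, dz_dx = np.gradient(dem, spacing)
    normals = np.stack([-dz_dx, -dz_dy, np.ones_like(dem)], axis=-1)
    return normals / np.linalg.norm(normals, axis=-1, keepdims=True)

def render_svbrdf(dem, spacing, albedo, sun_dir):
    """Shade every DEM pixel with its own reflectance value.

    Assumption: Lambertian shading with a per-pixel albedo map is used
    as a stand-in for the learned per-pixel BRDF parameters.
    """
    sun = np.asarray(sun_dir, dtype=float)
    sun = sun / np.linalg.norm(sun)
    # Cosine of the incidence angle, clamped for surfaces facing away.
    cos_i = np.clip(dem_normals(dem, spacing) @ sun, 0.0, None)
    return albedo * cos_i

# Usage: a 45-degree slope lit from the zenith, uniform reflectance.
x = np.arange(4) * 5.0
sloped_dem = np.tile(x, (4, 1))
image = render_svbrdf(sloped_dem, 5.0, np.ones((4, 4)), (0.0, 0.0, 1.0))
```

Changing `sun_dir` re-renders the same terrain under a different illumination without touching the reflectance map, which is the property the SVBRDF maps are meant to provide.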

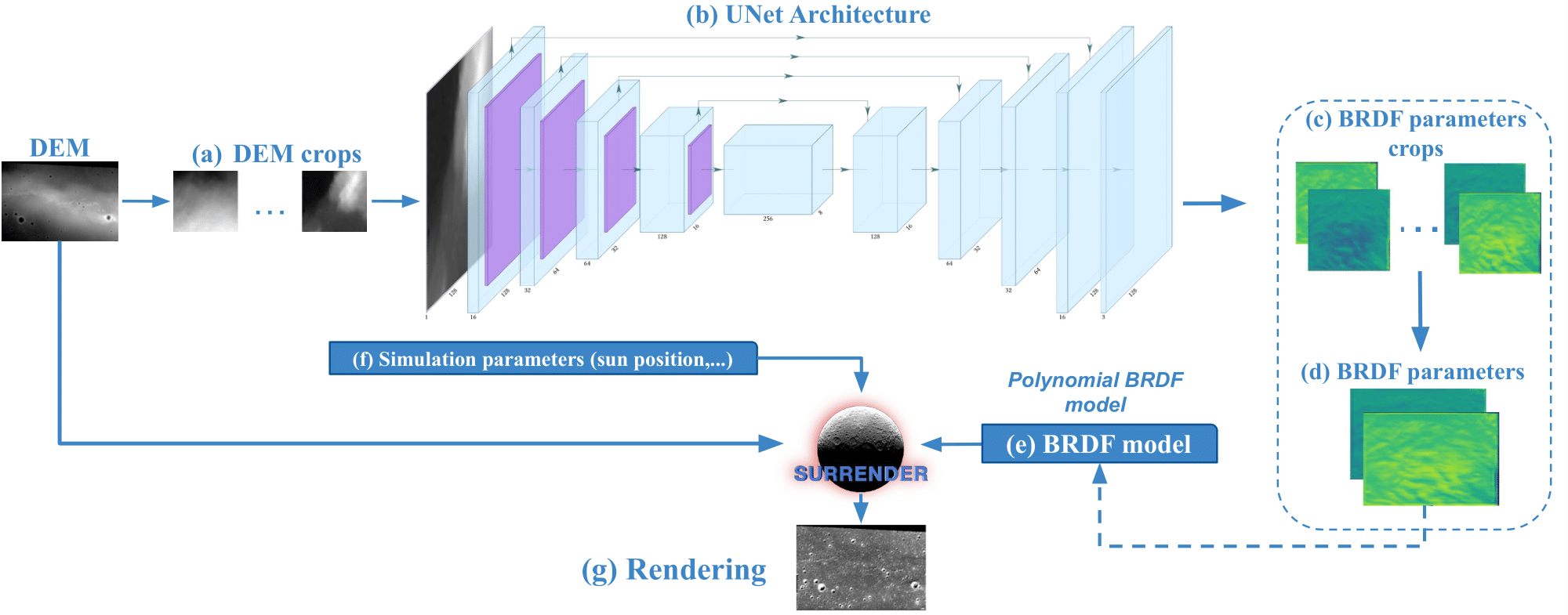

Lunar-G2R Training Overview

Lunar-G2R is trained using a differentiable rendering strategy that couples a neural SVBRDF predictor with physically based image formation. DEM tiles are processed by a U-Net architecture that predicts polynomial BRDF parameters at each spatial location. The DEM is rendered under the real acquisition geometry (camera pose and solar illumination) using the predicted reflectance parameters, and the resulting synthetic image is compared to the corresponding orbital observation.

The photometric error is back-propagated through the renderer to supervise learning, enabling reflectance properties to be learned directly from terrain geometry using real lunar imagery, without controlled lighting, multi-view acquisition, or specialized reflectance measurement setups.
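The render-compare-update loop described above can be illustrated with a toy example. This is a sketch under strong assumptions, not the Lunar-G2R training code: instead of a U-Net predicting polynomial BRDF parameters, a single per-pixel albedo map is optimized directly, the renderer is a plain Lambertian product, and the gradient is derived by hand rather than by an autodiff framework. All function names are hypothetical.

```python
import numpy as np

def render_toy(albedo, cos_i):
    """Toy differentiable renderer: reflectance times cos(incidence)."""
    return albedo * cos_i

def photometric_loss(albedo, cos_i, observed):
    """Half the summed squared error between rendering and observation."""
    return 0.5 * np.sum((render_toy(albedo, cos_i) - observed) ** 2)

def loss_gradient(albedo, cos_i, observed):
    """Gradient of photometric_loss with respect to the albedo map."""
    residual = render_toy(albedo, cos_i) - observed
    return residual * cos_i  # chain rule through the renderer

def fit_reflectance(cos_i, observed, steps=200, lr=1.0):
    """Gradient descent on the reflectance map (stand-in for network training)."""
    albedo = np.full(observed.shape, 0.5)
    for _ in range(steps):
        albedo = albedo - lr * loss_gradient(albedo, cos_i, observed)
    return albedo

# Usage: recover a synthetic reflectance map from one "observed" image.
rng = np.random.default_rng(0)
cos_i = rng.uniform(0.5, 1.0, size=(8, 8))        # known acquisition geometry
true_albedo = rng.uniform(0.05, 0.3, size=(8, 8))
observed = render_toy(true_albedo, cos_i)
estimate = fit_reflectance(cos_i, observed)
```

In this toy setting each pixel has one unknown and one observation, so the map is recovered exactly. In the setting described above, the gradient instead flows into the weights of the network that predicts the parameters from the DEM.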

Training Dataset

Lunar-G2R is trained on a large-scale dataset pairing real lunar orbital imagery with high-resolution digital elevation models and precise acquisition metadata. Each training sample associates a local DEM tile with a corresponding Lunar Reconnaissance Orbiter (LRO) image acquired under known viewing and illumination conditions. The dataset comprises more than 80,000 DEM–image pairs, sampled over the Tycho crater region, and is designed to ensure sufficient photometric diversity for BRDF observability. All samples are geographically split to avoid spatial leakage between training and evaluation. The full training dataset is publicly released to support reproducibility and future research.
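One common way to realize a geographic split is to assign whole spatial cells, rather than individual samples, to either training or evaluation. The sketch below illustrates that idea only; the actual splitting rule used for the dataset is not specified here, and the `lat`/`lon` fields, cell size, and hold-out rule are all hypothetical.

```python
import math

def cell_of(lat, lon, cell_deg):
    """Index of the latitude/longitude grid cell containing a sample."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))

def geographic_split(samples, cell_deg=0.5, eval_every=5):
    """Send every sample of a grid cell to the same split.

    Assumption: each sample is a dict with hypothetical 'lat' and 'lon'
    keys giving the tile center in degrees.
    """
    train, evaluation = [], []
    for sample in samples:
        row, col = cell_of(sample["lat"], sample["lon"], cell_deg)
        # Deterministic rule: hold out a regular subset of cells.
        if (row + col) % eval_every == 0:
            evaluation.append(sample)
        else:
            train.append(sample)
    return train, evaluation

# Usage with illustrative coordinates.
samples = [{"id": i, "lat": -43.0 + 0.1 * i, "lon": -11.0 + 0.07 * i}
           for i in range(60)]
train, evaluation = geographic_split(samples)
```

Because the unit of assignment is the cell, two tiles from the same cell can never end up on opposite sides of the split. Tiles that straddle a cell boundary may still overlap, so a buffer margin between cells might be needed in practice.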

StereoLunar V2 Dataset

In addition to the training dataset, we release StereoLunar V2, an enhanced version of the StereoLunar dataset in which lunar images are rendered using the spatially varying BRDFs estimated by Lunar-G2R instead of a spatially uniform Hapke model. By injecting learned SVBRDF parameters into a physically based rendering pipeline, StereoLunar V2 provides more realistic photometric behavior, better reproducing local brightness variations observed in real lunar imagery. This dataset is designed to support downstream tasks such as stereo reconstruction, multi-view geometry, and vision-based navigation under realistic lunar conditions.
